In a previous post I described a way to keep your Syncthing installation on a Synology DS1812+ up-to-date, as the package from the Cytec repository lags significantly behind the current version.

This method is no longer feasible. The main reason is that current builds of Syncthing are dynamically linked, i.e. Syncthing uses certain libraries located on the host machine. If these libraries are incompatible (like the ones on the Diskstation), strange things happen.

Until recently these libraries were part of the Syncthing binary. This so-called statically linked binary had no external references, so incompatible libraries on the host machine weren't a problem.

If you use the upgrade mechanism built into Syncthing, you get the dynamically linked version. This works on most machines, but not on the DS1812+.

You have to build a statically linked version yourself and replace the binary on the Diskstation manually. Fortunately, this isn't hard.

If you haven't done so already, install Syncthing from the Cytec repository. This copies all the other scripts needed for the Syncthing integration onto the Diskstation, e.g. the scripts to start and stop Syncthing via the Synology web GUI.

The following paragraphs describe how to compile Syncthing for amd64 on a fresh Ubuntu 14.04 LTS amd64 machine. They mostly repeat the steps described on the Syncthing site, but add information on how to get a statically linked binary.

The CPU in the Diskstation is amd64 compatible, but it can also run 32-bit binaries. By changing the environment variable GOARCH it should be possible to cross-compile Syncthing for other architectures, but I haven't tried this.

Syncthing is written in Go. To compile Syncthing you first have to compile Go itself (also as a statically linked version).

Prerequisites

Apart from the source code, you only need the Ubuntu packages git and mercurial.

I also suggest installing one additional package, libc-bin. It contains the utility ldd, which can be used to check that a binary is indeed not a dynamic executable.

$ sudo apt-get install git mercurial libc-bin
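Before installing anything, you can check which of the needed commands are already present. This is a minimal sketch; `require_tools` is a hypothetical helper, and the names to check are the commands these packages provide (`git`, `hg` for mercurial, `ldd` from libc-bin). One assumption beyond the list above: Go releases before 1.5 have a toolchain written in C, so building Go 1.3 from source also needs a C compiler, and checking for `gcc` is my addition.

```shell
# Hypothetical helper: report every command from the argument list that is
# not found on PATH. Returns 0 if all are present, 1 otherwise.
require_tools() {
    missing=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `require_tools git hg ldd gcc` prints one line per missing command; install those with apt-get as shown above (`gcc` is in the Ubuntu package of the same name).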

Compiling Go

First get the source code of the Go language. Currently Syncthing requires Go 1.3, which can be found on the golang website. There you will also find the code for other versions and architectures, if needed.

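Download it. The archive used here is the linux/amd64 binary distribution, which also contains the complete source tree that is rebuilt in the following steps: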

$ wget https://storage.googleapis.com/golang/go1.3.linux-amd64.tar.gz

The Go source code wants to live in /usr/local/go, and Syncthing expects it there. You need root privileges to create this folder; afterwards change its owner so that you can access it as a normal user (in this example “mike”; change as required).

$ sudo mkdir -p /usr/local/go

$ sudo chown mike:mike /usr/local/go

Unpack the source into /usr/local/go

$ tar -C /usr/local -xzf go1.3.linux-amd64.tar.gz
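The `-C /usr/local` option ends up in /usr/local/go because every entry in the archive already starts with `go/`. If you want to confirm this before unpacking, the hypothetical helper below prints the top-level directory of a `.tar.gz` archive. It is only a sanity check, not part of the original procedure.

```shell
# Hypothetical helper: print the first path component of the first entry
# in a gzip-compressed tar archive (e.g. "go" for the Go distribution).
archive_top_dir() {
    # awk reads the whole listing, so tar is never cut off by a closed pipe
    tar -tzf "$1" | awk -F/ 'NR == 1 { print $1 }'
}
```

Usage: `[ "$(archive_top_dir go1.3.linux-amd64.tar.gz)" = go ] && tar -C /usr/local -xzf go1.3.linux-amd64.tar.gz`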

Set the following environment variables

$ export PATH=$PATH:/usr/local/go/bin

$ export GOOS=linux

$ export GOARCH=amd64

$ export CGO_ENABLED=0

Setting CGO_ENABLED=0 disables cgo; this is what makes the Go toolchain produce statically linked binaries.
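These `export` commands only last for the current shell session. If you open a new terminal later, the variables are gone and the build falls back to the defaults, which include cgo. A minimal sketch, assuming you want to keep the settings in a file of your choice; the helper name `write_go_env` and the file name in the usage line are hypothetical:

```shell
# Hypothetical helper: write the build environment from above into a file
# that can be sourced again in any new shell with ". FILE".
write_go_env() {
    # The quoted 'EOF' keeps $PATH unexpanded here; it is expanded when the
    # file is sourced, so the PATH of that shell is extended.
    cat > "$1" <<'EOF'
export PATH=$PATH:/usr/local/go/bin
export GOOS=linux
export GOARCH=amd64
export CGO_ENABLED=0
EOF
}
```

Run `write_go_env ~/go-static.env` once, then `. ~/go-static.env` in every new shell before compiling.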

Start compiling

$ cd /usr/local/go/src

$ ./all.bash

This can take a while.

Check if the resulting binary is indeed statically linked

$ ldd /usr/local/go/bin/go

not a dynamic executable

(On a German system the message reads "Das Programm ist nicht dynamisch gelinkt", i.e. "the program is not dynamically linked".)

If you get something that looks like the following, the binary is dynamically linked

$ ldd /usr/local/go/bin/go

linux-vdso.so.1 => (0x00007fff0ed31000)

libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007f4cf9726000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f4cf9360000)

/lib64/ld-linux-x86-64.so.2 (0x00007f4cf9966000)

Only continue if you have the statically linked version.
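The check above can be scripted. A minimal sketch with two hypothetical helpers: `ldd_says_static` inspects ldd output, and `is_static` runs ldd with `LC_ALL=C`, which turns off message translation so the English text is matched even on a German system. Note that ldd prints the same "not a dynamic executable" message for files that are not ELF executables at all, so make sure the path points at the binary.

```shell
# Hypothetical helper: read ldd output on stdin and succeed if it reports
# a binary that is not dynamically linked.
ldd_says_static() {
    grep -q 'not a dynamic executable'
}

# Hypothetical helper: run ldd on the given file with untranslated messages.
# 2>&1 catches the message in case your ldd prints it on stderr.
is_static() {
    LC_ALL=C ldd "$1" 2>&1 | ldd_says_static
}
```

Usage: `is_static /usr/local/go/bin/go && echo "statically linked"`. The same works for the Syncthing binary built below.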

Check Go

$ go version

go version go1.3 linux/amd64
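Because the PATH export above appends /usr/local/go/bin, a `go` binary installed elsewhere (for example from an Ubuntu package) would be found first. A minimal sketch that checks both which `go` is used and what it reports; the helper names are hypothetical, and the expected line is the one shown above:

```shell
# Hypothetical helper: succeed if a line printed by "go version" reports
# Go 1.3 for linux/amd64, e.g. "go version go1.3 linux/amd64".
go_version_ok() {
    case $1 in
        "go version go1.3 linux/amd64") return 0 ;;
        *) return 1 ;;
    esac
}

# Hypothetical helper: warn if "go" does not come from /usr/local/go/bin,
# then check the reported version.
check_go() {
    go_bin=$(command -v go) || { echo "go not found on PATH" >&2; return 1; }
    if [ "$go_bin" != /usr/local/go/bin/go ]; then
        echo "warning: using $go_bin instead of /usr/local/go/bin/go" >&2
    fi
    go_version_ok "$(go version)"
}
```

Run `check_go && echo "Go is ready"` in the shell where the variables are set.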

Compiling Syncthing

Create a directory for the Syncthing source (the example shows its preferred location) and get the source:

$ mkdir -p ~/src/github.com/syncthing

$ cd ~/src/github.com/syncthing

$ git clone https://github.com/syncthing/syncthing

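Compile it in the same shell, so that the environment variables set above (in particular CGO_ENABLED=0) are still in effect: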

$ cd syncthing

$ go run build.go

This doesn't take long.

Check it

$ cd bin

$ ldd syncthing

not a dynamic executable

This is the statically linked binary we need.
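ldd only tells you whether the binary is dynamic. If you also want to confirm that it was built for amd64, the `file` utility (assuming it is installed, which is usual on Ubuntu) describes both the architecture and the linking, with output along the lines of `ELF 64-bit LSB executable, x86-64, version 1 (SYSV), statically linked, not stripped`. A minimal sketch with hypothetical helper names; the exact wording of `file` output varies between versions, so treat the pattern as an assumption:

```shell
# Hypothetical helper: read "file -b" output on stdin and succeed if it
# describes a statically linked x86-64 binary.
file_says_static_amd64() {
    desc=$(cat)
    case $desc in
        *x86-64*"statically linked"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Hypothetical helper: run file on the given binary.
check_binary() {
    # -b omits the file name from the output
    file -b "$1" | file_says_static_amd64
}
```

From the `bin` directory, `check_binary syncthing && echo "ready for the Diskstation"` combines both checks.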

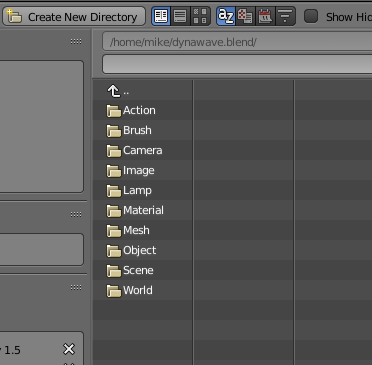

This file has to be copied to the Diskstation. If you have installed Syncthing in its default location (volume1), the binary is located at:

/volume1/@appstore/syncthing/bin/syncthing

Stop the Syncthing process via the Synology web GUI, rename the old binary (just in case), and copy the new one to that location. Then restart the process in the GUI.
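If SSH access is enabled on the Diskstation, the rename-and-copy step can be done from its shell. A minimal sketch, assuming you have already copied the new binary to the Diskstation (for example into a shared folder) and are logged in with enough rights to write to /volume1/@appstore/syncthing/bin. `replace_binary` is a hypothetical helper written in plain POSIX sh; it does not stop or start Syncthing, so still use the web GUI for that.

```shell
# Hypothetical helper: keep the current binary as TARGET.old and install
# NEW_BINARY as TARGET.
# Usage: replace_binary NEW_BINARY TARGET
replace_binary() {
    new=$1
    target=$2
    if [ ! -f "$new" ]; then
        echo "no such file: $new" >&2
        return 1
    fi
    if [ -e "$target" ]; then
        # rename the old binary, just in case (overwrites an older .old file)
        mv "$target" "$target.old" || return 1
    fi
    cp "$new" "$target" && chmod 755 "$target"
}
```

For example `replace_binary /volume1/homes/mike/syncthing /volume1/@appstore/syncthing/bin/syncthing`, where the first path is a hypothetical upload location. Afterwards compare `ls -l` of `syncthing` and `syncthing.old`; if the package runs Syncthing as a dedicated user, the owner of the new file may need adjusting.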

You're good to sync.